An Index of Bio x AI

Perspectives from AlphaFold to Agents

Introduction

This Substack began in 2023 as an attempt to synthesize my evolving thoughts on the intersection of AI and the life sciences. With readership growing across disciplines, this post serves as a chronological index and reflection—highlighting key themes, recurring hype cycles, and lessons learned from building systems at this nexus.

The primary style has been “semi-technical.” Because cross-training remains rare at this intersection, the goal has been to write for diverse audiences without becoming either pedantic or oversimplified. The posts revisit several topical issues and inflection points from recent years, offering contemporaneous perspectives that may still hold up over time. They also document my progress in exploring how systems at the intersection of AI and biology are actually built.

The topics are presented chronologically, from earliest to most recent, to reflect the evolution of ideas. Brief descriptions are included for interested readers to take a deeper dive. If you find this outline or these articles useful, please consider subscribing and sharing.

For more information: jasonsteiner.xyz

2023 — Foundation Models & Scale

Foundation models were a major topic in 2023, albeit much less so than today. AlphaFold and its derivatives suggested that similarly transformative models could be built for every aspect of biology. Nearly every new publication was touted as a “foundation model,” a term that was widely misunderstood. Today, there are many examples of complex deep neural networks applied to biological datasets, but none approaches the generality or utility of the protein language models, for clear reasons: across most of biology, the data are far less abundant, and the systems and tasks far more complex, diverse, and specific. We have many good models for many specific things, but very few that can rightfully be called foundation models.

This article is a primer to help biologists think about data in a structured way. One pertinent difference between the fields is how they treat data. In the life sciences, data is mostly a means of answering a specific question or testing a hypothesis. In AI, data is mostly considered in terms of its relationships and distribution. This article is intended to bridge that gap.

In the early days of the current AI paradigm, benchmarks were the coin of the realm. Every paper and every company touted its performance on every possible benchmark. Benchmarks became obsolete faster than they could be created (we are now even proposing Humanity’s Last Exam[1]), yet very little of it seemed to matter in practice. This article draws on the practical experience of commercializing transformative products in markets where every competing product is head and shoulders above the prior technology. When a field changes dramatically and you find yourself selling the minutiae of a benchmark rather than the magnitude of the change, you are losing. In a knife fight in a phone booth, it doesn’t matter if you win; everyone gets cut.

In the first wave of AI hype, scale was the driving factor. The “bitter lesson” drove tens of billions of dollars into scaling model parameters. That emphasis has since abated somewhat, as scaling shifted from brute-force training toward test-time compute and the massive infrastructure needed for commercial inference. But the issue of scale remains, and this article offers a perspective on the relationship between model scale, data content, and model training. It presents the idea of “impedance matching” data input to model parameters as a framework for understanding training, overfitting, and performance.

This two-part series was drafted in the context of Casgevy, the first FDA-approved CRISPR-based therapy, for sickle cell disease. It traces the decades-long history of discovering the genetic mechanism of sickle cell disease and the biological tools and data that emerged during that time. It then examines how AI applied to genomics is now poised to transform our understanding of genetic disease and, coupled with advances in molecular tools like CRISPR, our ability to cure it.

In late 2023, OpenAI was in turmoil after Sam Altman was fired, an event that appeared to be rooted in rumors that the company had achieved AGI. This was, apparently, not true, but the underlying technology it was pursuing, namely the combination of generative AI and reinforcement learning (RL), is the foundation of the new scaling paradigm behind today's reasoning models. This article anticipated the release of OpenAI's “Strawberry” or “o-series” models and described how they likely worked before they were released.

2024 — Reasoning & Applications

Following the article AI Mathematicians and Scientists and its paradigm of combining generative AI with RL, an immediate question was whether this paradigm would apply to biological problems. For language-based reasoning models, LLMs are powerful (and often accurate) generators of effectively infinite synthetic data, which is why current reasoning models have been so successful. Synthetic data is a powerful tool for training models, but it has limitations in biological systems that make it less effective there. This article covers those limitations.

Following the previous articles on the idea, or myth, of AGI and the power of foundation models to generalize, this article examines what deep learning models may actually be capable of. Much of the discussion at the time centered on whether models can “extrapolate beyond their training data,” that is, whether their predictive or generative capacities remain valid on unseen data domains. This idea is at the core of AGI, specifically its “general” aspect. One of the challenges in describing this effect was even being clear about what lay inside or outside the training distribution, and the phrase “generalize outside of distribution” became a meaningless talking point in many cases. This article provides some context for how to think about these topics.
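A toy example can make “inside” versus “outside” the training distribution concrete. The sketch below is a hypothetical illustration, not drawn from the article: it fits a straight line to samples of y = x² on [0, 1] and compares the error inside that range with the error at x = 3. The function names and data are invented for illustration, and the one-dimensional range check is a deliberate simplification; deciding what counts as in-distribution for high-dimensional biological data is much harder.

```python
def true_fn(x: float) -> float:
    """Ground-truth relationship (hypothetical); the model only sees samples of it."""
    return x * x


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def in_training_range(x: float, xs: list[float]) -> bool:
    """Crude 1-D check: does x fall within the span of the training inputs?"""
    return min(xs) <= x <= max(xs)


# Training inputs cover only [0, 1] (21 evenly spaced points).
TRAIN_XS = [i / 20 for i in range(21)]

if __name__ == "__main__":
    slope, intercept = fit_line(TRAIN_XS, [true_fn(x) for x in TRAIN_XS])
    in_err = max(abs(slope * x + intercept - true_fn(x)) for x in TRAIN_XS)
    print(f"max in-range error: {in_err:.3f}")
    for x in (0.5, 3.0):
        err = abs(slope * x + intercept - true_fn(x))
        print(f"x={x}: in range={in_training_range(x, TRAIN_XS)}, error={err:.3f}")
```

Within [0, 1] the line is a serviceable approximation (worst-case error around 0.16), but at x = 3 it misses by more than 6, because nothing in the training data constrains the curvature there.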

Virtual cells were recently rebranded with the Arc Institute's Virtual Cell Challenge[2], but the idea isn't new. This two-part series outlines some of the ideas involved in building cell simulations with deep learning. It puts into context the development of various foundation models in biology, as well as developments in joint embeddings and world models like JEPA. Virtual cells are, in some sense, like AGI: not precisely defined but highly desirable. These articles focus primarily on eukaryotic cells, which are considerably more complex than, for example, the bacterial cell models common in synthetic biology. Currently, most virtual cell implementations are somewhat academic, and many independent evaluations of published models report that most architecturally sophisticated models do not meaningfully outperform much simpler linear models. The challenges with virtual cells are both data-bound and complexity-bound. Cellular systems should be expected to be metastable in general (i.e., cells can differentiate but settle into relatively fixed states), so one might expect cell states to occupy a lower-dimensional space than raw data collection alone would suggest, but the challenge remains considerable.

This article is an experiential tutorial on building retrieval-augmented generation (RAG) systems for research. One of the remarkable things about recent AI developments is how they empower individuals to learn and build useful things. This article provides a step-by-step process for creating a RAG system over bioRxiv publications. Building sophisticated RAG systems is a complex engineering effort, and a future article may cover how these systems can be optimized with techniques like vector caching and database sharding to accelerate and scale retrieval. It has been fascinating to see how much can be built.
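The retrieval step at the heart of a RAG system can be sketched in a few lines. This is a minimal, hypothetical illustration, not the pipeline from the article: it substitutes TF-IDF cosine similarity for the dense embeddings and vector database a real bioRxiv system would likely use, and the toy abstracts and function names (`build_index`, `retrieve`, `build_prompt`) are invented for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical toy "abstracts" standing in for bioRxiv records.
DOCS = {
    "crispr": "CRISPR base editing corrects the sickle cell mutation in hematopoietic stem cells.",
    "folding": "Protein structure prediction with deep learning models such as AlphaFold.",
    "perturb": "Single-cell perturbation screens measure transcriptional responses to gene knockouts.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: dict[str, str]) -> tuple[dict[str, dict[str, float]], dict[str, float]]:
    """Return a TF-IDF vector per document plus the IDF table (stand-in for embeddings)."""
    tokenized = {doc_id: tokenize(text) for doc_id, text in docs.items()}
    doc_freq: Counter = Counter()
    for tokens in tokenized.values():
        doc_freq.update(set(tokens))
    n_docs = len(docs)
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1 for t, df in doc_freq.items()}
    index = {
        doc_id: {t: c * idf[t] for t, c in Counter(tokens).items()}
        for doc_id, tokens in tokenized.items()
    }
    return index, idf


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse term-weight vectors."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, index: dict[str, dict[str, float]], idf: dict[str, float], k: int = 2) -> list[str]:
    """Rank documents by similarity to the query and return the top-k ids."""
    q_vec = {t: c * idf[t] for t, c in Counter(tokenize(query)).items() if t in idf}
    return sorted(index, key=lambda d: cosine(q_vec, index[d]), reverse=True)[:k]


def build_prompt(query: str, docs: dict[str, str], hits: list[str]) -> str:
    """Stuff retrieved passages into a prompt for a downstream LLM."""
    context = "\n\n".join(f"[{d}] {docs[d]}" for d in hits)
    return f"Answer using only the context below.\n\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    index, idf = build_index(DOCS)
    question = "base editing for sickle cell disease"
    print(build_prompt(question, DOCS, retrieve(question, index, idf, k=1)))
```

In a real system the prompt would then be sent to an LLM; replacing the TF-IDF vectors with embeddings from an embedding model and the linear scan with a vector index is where most of the engineering effort goes.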

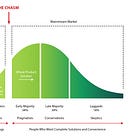

Building on the previous article about RAG systems and how rapidly prototypes and applications can now be built, at least in software, this article shifts the focus to what that actually means from a product perspective. In a world where anything can be built quickly, what actually wins? This article draws on my commercial experience introducing transformative technologies to market and on how those commercial and competitive landscapes have played out over time. It is perhaps the most important strategic primer for any builder in the AI space.

It is a very common refrain in the life sciences that we lack sufficient data for effective deep learning. In many respects, this is true. Biological data has historically been generated to test specific biological hypotheses, not at the scale, and with the attention to metadata and distributions, required to train deep neural networks. Nonetheless, many life science companies are raising substantial venture funding to build data platforms and train AI models. The most important question for these companies is what they should expect from the data they generate. Training a deep neural network can be viewed as a form of data compression, so the outstanding question for any data generation platform is how compressible its underlying data actually is.
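One crude way to make the compressibility question tangible is to run a general-purpose compressor over data with different amounts of redundancy. The sketch below is a hypothetical proxy, not the article's method: Python's `zlib` captures only literal repetition, whereas a neural network compresses by learning statistical structure, so this illustrates the question rather than answering it. The sequences are synthetic and the helper names are invented for illustration.

```python
import random
import zlib


def compression_ratio(data: bytes, level: int = 9) -> float:
    """Compressed size divided by raw size; lower means more redundancy."""
    if not data:
        raise ValueError("data must be non-empty")
    return len(zlib.compress(data, level)) / len(data)


def random_dna(n: int, seed: int = 0) -> str:
    """Uniformly random A/C/G/T sequence with no biological structure."""
    rng = random.Random(seed)
    return "".join(rng.choice("ACGT") for _ in range(n))


def repeat_dna(motif: str, n: int) -> str:
    """Sequence built by tiling a short motif out to length n."""
    return (motif * (n // len(motif) + 1))[:n]


if __name__ == "__main__":
    n = 10_000
    samples = {
        "tiled motif": repeat_dna("ACGTTGCA", n),
        "random bases": random_dna(n),
    }
    for name, seq in samples.items():
        print(f"{name}: compression ratio = {compression_ratio(seq.encode()):.3f}")
```

Even the random sequence compresses substantially, because each base carries at most 2 bits of information but is stored as an 8-bit ASCII character, so no compressor can push it much below a quarter of its raw size. The interesting question for a data platform is how much structure exists beyond that trivial floor.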

2025 — AI Scientists & Agents

AI for Science is an amalgamation of two loosely defined terms, but it is one of the most active areas of research in the field right now. Broadly, this article divides the utility of AI into two domains: a “circuit search” domain and a “policy search” domain. Circuit search focuses on training AI models as function approximators, namely to predict and generate accurate representations of the world. This domain relies heavily on accurate biological data, and foundation models fall into this category. Policy search focuses on learning what to do. It can be thought of as a hypothesis or planning domain and is the realm of reasoning models. AI for Science is most powerful where these domains converge.

There has been an expectation in Silicon Valley of a single-founder unicorn: a company valued at $1B run by a single person leveraging AI. This is quite an audacious claim, yet it is striking how quickly and efficiently some AI companies have scaled; it is no longer uncommon to reach such valuations with just a few dozen people. The idea of AI systems running an organization autonomously is, in some respects, frightening and in others amazing. There is always a lag in real-world adoption of new technologies, and AI will be no exception; however, the leverage that becomes possible as these technologies grow more refined and sophisticated opens up very interesting possibilities.

This article breaks down some of the internal structure of AI Scientists. It examines code from two open-source systems, from Sakana.ai and AI2, and outlines the logic of their thinking, reflection, and action processes, as well as how tools are constructed and used. It is best for readers who want an overview of how “scientific agents” are built.

This article demonstrates what biomedical agents are capable of, drawing on my own development efforts. OpenAI’s recent system card[3] on ChatGPT agent, which focused heavily on biosafety, echoed many of the same observations. While these agents can achieve near-perfect accuracy on tasks from benchmarks like LAB-Bench[4], they also exhibit hallucination risks, fabricating data when uncertain. Designing robust agents requires not just tool integration but also safeguards that keep silent failures from becoming silent fabrications. For the moment, at least some biological judgment and understanding will still be required to evaluate the outputs of biological agents, but this space is advancing rapidly.

This article covers recent publications and techniques for training reasoning models, such as those that may be employed in International Mathematical Olympiad (IMO) competitions, as well as evolutionary systems like AlphaEvolve and the Darwin Gödel Machine, and how such systems can be engineered into self-improving AI.

Concluding Notes

The throughline of this Substack has been the impact of AI on the life sciences. The articles outlined above are a subset of that investigation. Some additional topics that may be of interest are:

If you have found them useful or interesting, please consider subscribing and sharing with others.

References

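1. Humanity’s Last Exam: https://lastexam.ai/

2. Virtual Cell Challenge, Cell: https://www.cell.com/cell/fulltext/S0092-8674(25)00675-0

3. OpenAI, ChatGPT agent system card: https://cdn.openai.com/pdf/839e66fc-602c-48bf-81d3-b21eacc3459d/chatgpt_agent_system_card.pdf

4. LAB-Bench (GitHub): https://github.com/Future-House/LAB-Bench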