The Specter of Emergence and AGI

2+2 = fish ... and other emergent topics

2+2 = fish

2+2 = -6

2+2 = 3

2+2 = 4 (EMERGENCE!)

Much of the current media is filled with commentary on AGI and its potential impact on the world. Large political blocs and movements, along with a cadre of AI companies, are elevating the message that the specter of AGI may very well lead to the extinction of the human race. There is a sense of wizardry in the halls of such companies that appears to wash over political and social institutions, as if they were a kind of high priesthood of the future of humanity.

A simple view of this could be cynical: it is very much in the interests of such companies to elevate their position in the world by threatening the possible extinction of the human race as an unintended consequence of their own work. From an outsider’s perspective the situation seems to border on absurdity, with each participant in the overall hype cycle claiming to be extremely frightened yet plunging forward and pleading for gating regulation as a mea culpa. It’s not within the scope of this article to pass judgement on the intentions of the parties involved, nor is it in any way a diminution of the potential threats of AI. It is, however, a critique of the mysticism that is driving some of the popular narrative about AGI.

I am a strong proponent of the potential of science and engineering, and of the belief that we do not always understand what might be possible. Yet I believe we should approach such hypothetical futures with a dose of structural thinking, which often appears to be abandoned in the current AI news cycle in favor of slogans such as “bowing to the gods of scale” and similar glossy phrases.

We are at the peak of the expectation cycle with respect to the potential of AI, and the FOMO is palpable. I would be happy (and a bit frightened) to be proven wrong if we are to genuinely unlock some form of artificial super intelligence that has unpredictable emergent capabilities and sentience, but I think this is unlikely, at least if we are to take lessons from evolution, notably around selective pressure. At the moment, I am unaware of a more general or powerful argument for the production of intelligence (or at least purposeful intelligence) than evolution. This does not diminish the power of complexity or exponential growth. My doubt primarily concerns intention or some form of self actualization: the idea that a super intelligence will become self directed.

I’ll present in this article the position that self direction and intentional goal seeking (what Dawkins calls “neo-purpose”; see the lecture referenced below) is a function of evolutionary pressures that relate to survival fitness. The imposition of neo-purposes on things that do not have such evolutionary pressures is done to serve living things that do (e.g. humans), and the derivation of neo-purposes in the sense of intelligent beings (e.g. self directed narrative) is a consequence of evolutionary selective pressure. To the extent that selective reproductive pressures do not exist in computer programs, it seems unlikely that this type of AGI will spontaneously arise.

Mea Culpa

With the recent release of the Oppenheimer movie, one recalls the famous von Neumann quote:

“Some people confess guilt to take credit for the sin”

There is little doubt that the advances in the capabilities of large language models have taken much of the world by surprise (though less so the research community) and that these models continue to achieve levels of accuracy and perception that are strikingly useful for many tasks. However, much of the discussion around the possible threat of AGI to humanity resides in the “dark matter” – the shroud of mystery that AI companies strive to maintain over the specter of “emergence”. In this narrative, emergence and the unknown that it inherently entails are the things we should fear the most. The unknown keeps all on the hook.

The maintenance of this narrative of the mysterious “unknown” borders on sophisticated marketing. To be fair, however, the possible capabilities of AI systems are indeed extraordinary in their ability to faithfully and usefully replicate underlying features of language, and other such digitizable data formats, and perhaps more importantly, their ability to store and transfer saved world knowledge in a frictionless and costless manner. This latter feature is probably the most important as it is at the core of the exponential capacity of AI based systems. The consequences of unmitigated scale and accessibility of these types of systems are profound, but do not reside in the mysterious concept of “emergence” – or if they do, it is certainly not supported by where we are today or by any of the theories of where we might reasonably be in the future.

The Scope and Boundaries of Emergence

To discuss the concept of “emergence” it’s important to understand what it means and how it has been studied in the past.

In simple terms, emergence is the observation of unexpected, unpredictable and complex behavior that arises out of simple rules. Emergence can be seen in a flock of birds or a school of fish moving in unison – each individual only obeys the local rules of maintaining some relation to its neighbors, and the result is large-scale, complex and undirected behavior. Emergence has been studied in the past in the context of cellular automata such as Conway’s Game of Life and in complex systems such as societies, cells, neurons and so forth. It is no doubt a real feature of complex systems, but it does not follow that the concept applies in the same way to, for example, large transformer models.

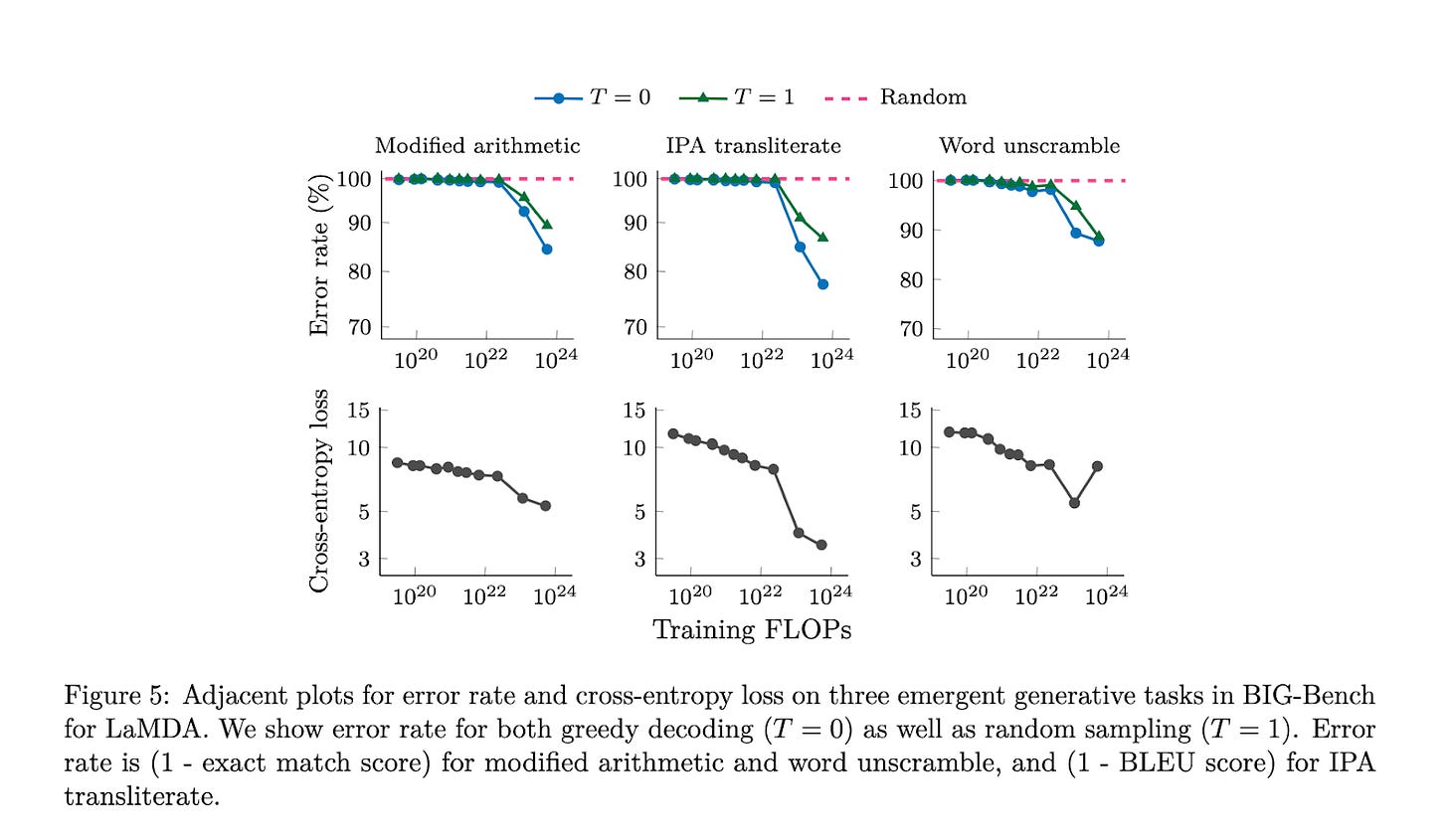
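
The way Conway’s Game of Life produces large-scale structure from purely local rules can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the function name `life_step` and the set-of-coordinates representation are choices made here for brevity. The only rules encoded are the standard ones (a dead cell with exactly three live neighbors is born, a live cell with two or three live neighbors survives), yet the “glider” pattern travels across the grid even though no rule mentions movement.

```python
from collections import Counter


def life_step(live):
    """Advance a set of live (row, col) cells by one generation."""
    # Count live neighbours for every cell adjacent to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {cell for cell, n in counts.items()
            if n == 3 or (n == 2 and cell in live)}


# The standard five-cell glider.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

state = glider
for _ in range(4):
    state = life_step(state)

# After four generations the same shape reappears one cell down and one cell right.
shifted = {(r + 1, c + 1) for r, c in glider}
print(state == shifted)
```

Nothing in `life_step` refers to shapes, direction or motion. The travelling glider is a property of the system as a whole, which is the sense of “emergence” used in the complex systems literature.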

While “emergence” and the threat of AGI have made their way across the news waves and popular media, a more practical analysis of the concept of emergence has been discussed in a 2022 paper titled “Emergent Abilities of Large Language Models” authored by Google researchers.

While this paper has a number of examples, the two ideas that stand out are:

The emergence of capabilities as a function of scaling model size is likely explainable by general model capacity (e.g. how many connections are needed to represent the scope of stored knowledge) and training data representation (e.g. if the training data has a broader representative set of, for example, rare data points, the model becomes better able to navigate certain tasks accurately). This is a scale independent feature.

The definition of “emergent” is often a definition of what task success looks like. If a task success metric is precise or binary, then emergence may appear suddenly when, in fact, model performance has been gradually improving over the scaling trajectory. This is perhaps the more pernicious definition of emergence because it relies on an arbitrary definition of task success.

One other important definition of emergence is that capabilities arise that are not explicitly in the training data. However, this seems to be a very tenuous definition, and it presupposes that humans actually know explicitly what is in their experience. It is, for example, entirely unreasonable to expect a large language model trained only on the English language to produce interpretable Spanish, but it is not unreasonable to say that such a model trained on English should be able to accurately complete a standardized test in English.

In the words of the authors:

“These plots are expected to look like one of the following:

Outcome 1: For the model scales where EM/BLEU/acc is random, cross-entropy loss also does not improve as scale increases. This outcome implies that for these scales, the model truly does not get any better at the tasks.

Outcome 2: For the model scales where EM/BLEU/acc is random, cross-entropy loss does improve. This outcome implies that the models do get better at the task, but these improvements are not reflected in the downstream metric of interest. The broader implication is that scaling small models improves the models in a way that is not reflected in EM/BLEU/Acc, and that there is some critical model scale where these improvements enable the downstream metric to increase to above random as an emergent ability.

We find that all six BIG-Bench tasks fall under Outcome 2, and detail this analysis below. Overall, the conclusion from this analysis is that small models do improve in some ways that downstream metrics EM/BLEU/Acc do not capture. However, these tasks are still considered emergent, and this analysis does not provide any straightforward indicators of how to predict such emergent behaviors.”

And in graphical form:

The top plots clearly indicate a phase transition in capabilities, yet the cross-entropy plots tell a different story – the models continue to improve generally with additional training, in different ways. Some improvements might be more abrupt than others, and some might appear inverted. It seems that the idea of a specific property suddenly emerging in a model is not actually what is going on under the hood. There is more to the story.

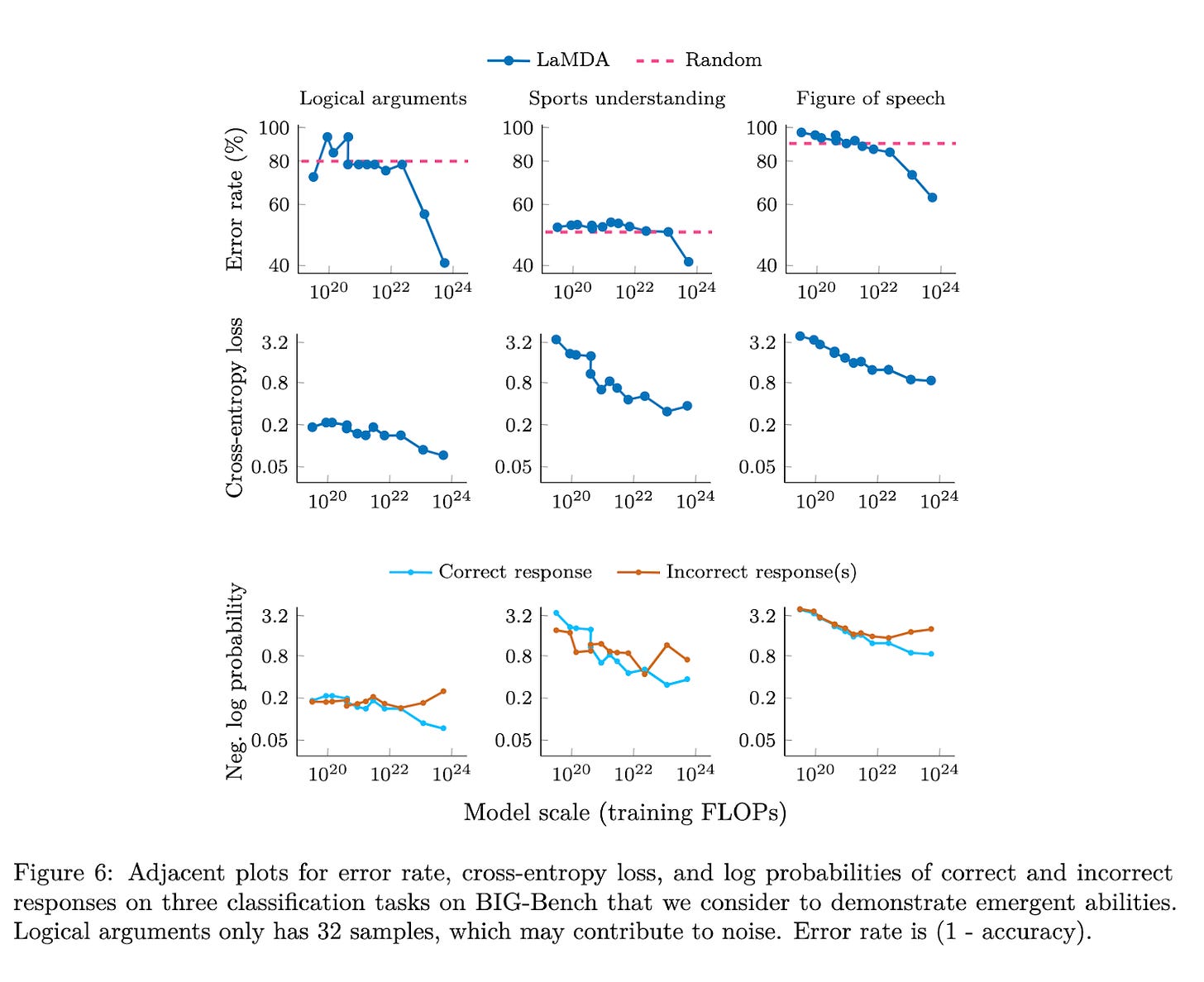

A similar example is shown on a different set of benchmarks:

It seems clear here that cross-entropy losses (the middle set of graphs) appear to improve much more continuously than their error rate counterparts.
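
The gap between a smooth loss curve and an abrupt task metric can be reproduced with a toy calculation. The sketch below is an illustration under strong assumptions, not an analysis of the paper’s data: the accuracy values are hypothetical stand-ins for increasing model scale, tokens are treated as independent, the answer length of 10 tokens is arbitrary, and per-token accuracy is equated with the probability assigned to the correct token. Under those assumptions, an all-or-nothing metric such as exact match is the per-token accuracy raised to the power of the answer length.

```python
import math


def exact_match_rate(per_token_accuracy, answer_length):
    """Chance that every token of an answer is right, assuming independent tokens."""
    return per_token_accuracy ** answer_length


def per_token_cross_entropy(per_token_accuracy):
    """Cross-entropy in nats when the correct token receives this probability."""
    return -math.log(per_token_accuracy)


# Hypothetical, smoothly improving per-token accuracies standing in for model scale.
accuracies = [0.50, 0.60, 0.70, 0.80, 0.90, 0.95, 0.99]
ANSWER_LENGTH = 10  # assumed number of tokens that must all be correct

rows = [
    (p, per_token_cross_entropy(p), exact_match_rate(p, ANSWER_LENGTH))
    for p in accuracies
]
for p, loss, em in rows:
    print(f"per-token acc={p:.2f}  cross-entropy={loss:.3f}  exact match={em:.3f}")
```

The cross-entropy column falls steadily at every step, while the exact match column stays close to zero for the first several rows and then climbs steeply. The underlying improvement is gradual; only the binary scoring rule makes it look like a sudden jump.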

Emergence as “lock in”

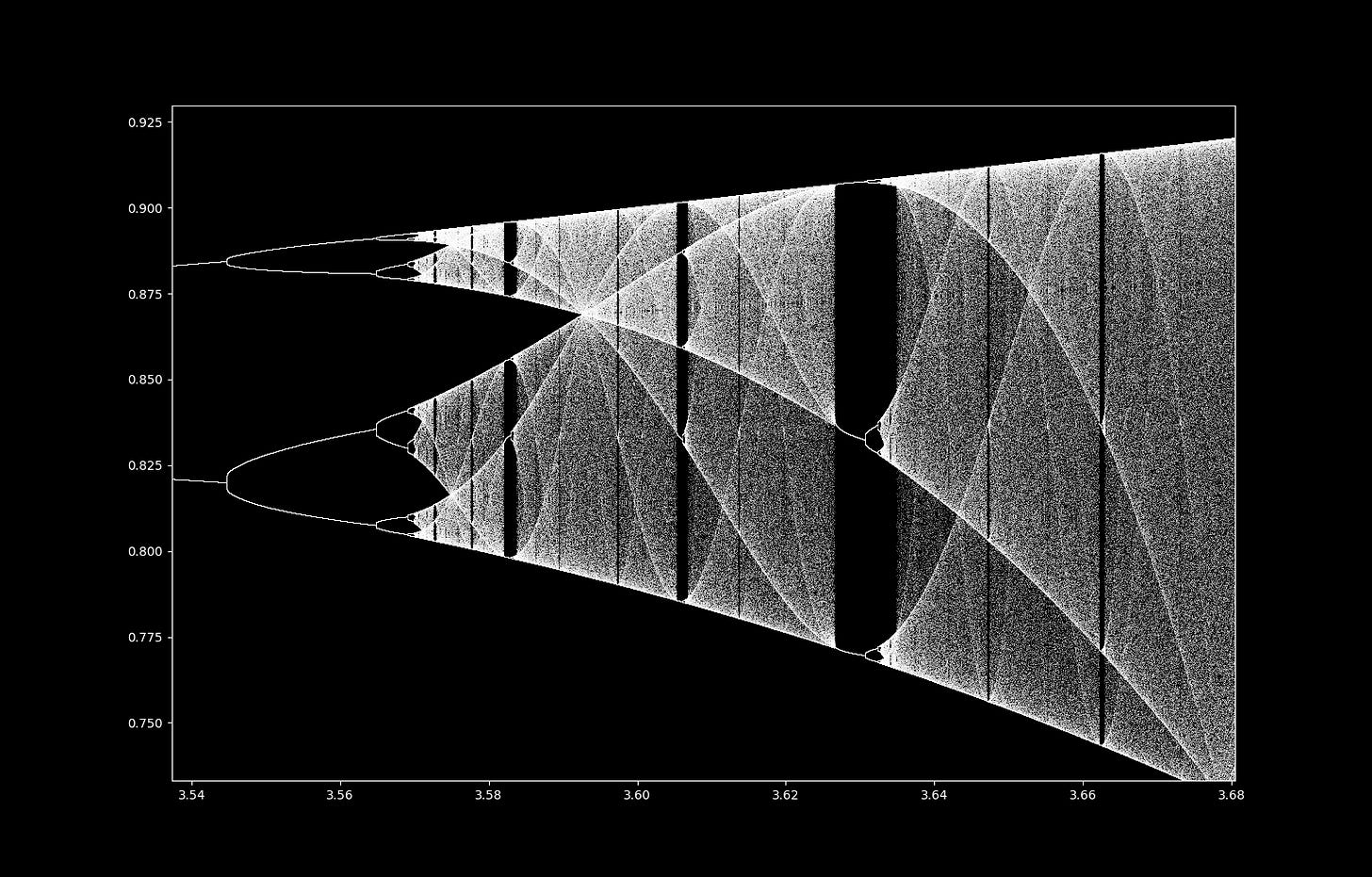

One supposition about the origin of an emergent capability in a deep neural network is the concept of “lock in”. In the process of training, one can imagine the weights being adjusted so as to jiggle the loss function down a gradient. There are many optimizers that aim to find the best gradient trajectory, but the actual manifold is unknown, with a topology that may suddenly drop into a local feature that happens to be very good at minimizing the error function for a given task. This process is not unheard of. In fact, this type of jiggling and “lock-in” was a basis for some of the early protein folding algorithms, which sought lower energy states by randomly jiggling a structure, in some cases achieving larger than random drops in free energy as specific gradient features were jiggled into. It is also, somewhat curiously, seen in areas such as the fractal geometry of chaos theory, with perhaps the most fascinating example being the logistic map. This map represents the terminal states of a basic population model, X(n+1) = L*X(n)*(1-X(n)), where L is a hyperparameter (the growth rate) and X(n) is the population at time step n. The (1-X(n)) term represents the fact that a fraction of the population also dies at each time step. The behavior of such a simple system is strikingly dynamic as you turn up the growth rate and can be seen below:

Ref: https://blbadger.github.io/logistic_map/logistic_period_zoom3.png

With the growth rate L on the x-axis and the stable population equilibria on the y-axis, it is clear that pumping up the growth rate dramatically changes the behavior: at first smoothly in bifurcating patterns, then entirely chaotically, then broken by odd windows of period-3 stability before becoming entirely chaotic once again. This behavior is connected to the famous paper “Period Three Implies Chaos”, which is a hallmark of chaos theory. One of the more straightforward takeaways is that “lock-in” in complex systems is indeed a possibility, and may be at least part of the reason for large changes in loss function improvements around specific tasks as hyperparameters get updated in a network. Specifically what is happening inside of a deep neural network is an area of active research. The above is just one curious connection to observations in chaos theory.
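
The logistic map described above is simple enough to iterate directly, which makes the “lock-in” behavior easy to check by hand. The sketch below is a minimal illustration: the function name `logistic_attractor`, the starting value of 0.5, the burn-in length and the rounding precision are all arbitrary choices made here, not part of any standard recipe. It iterates X(n+1) = L*X(n)*(1-X(n)), discards the early transient, and reports how many distinct values the population keeps visiting.

```python
def logistic_attractor(growth_rate, x0=0.5, burn_in=2000, keep=64, digits=4):
    """Iterate X(n+1) = L*X(n)*(1-X(n)) and return the distinct long-run values."""
    x = x0
    # Discard the transient so that only the long-run behaviour is recorded.
    for _ in range(burn_in):
        x = growth_rate * x * (1.0 - x)
    seen = set()
    for _ in range(keep):
        x = growth_rate * x * (1.0 - x)
        seen.add(round(x, digits))  # rounding merges floating point jitter
    return sorted(seen)


# Growth rates chosen to land in qualitatively different regimes.
for L in (2.5, 3.2, 3.5, 3.83, 3.9):
    values = logistic_attractor(L)
    print(f"L={L}: {len(values)} distinct long-run value(s)")
```

At L=2.5 the population settles on a single equilibrium, at 3.2 it alternates between two values, at 3.5 between four, and at 3.83 it locks into a period-3 cycle. At L=3.9 the sampled values do not settle onto a short cycle at all. Small, smooth changes in one parameter move the system between qualitatively different regimes, which is the analogy being drawn to abrupt changes in task performance.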

Genuine Emergence in Biological Data Sets Seems Unlikely

If we consider some of the features of “emergent phenomena” above, the idea of genuine emergence as a function of compute or model size seems an unlikely proposition at this stage in biological research. There are several reasons for this, but perhaps the most salient is the current coverage density of good data sets. If we take the two ideas of emergence in this paper, namely:

That a certain size of model is needed to contain the saved data of a system, and the training data needs to be sufficiently diverse to cover the edge cases

That emergence is often just a label defined by an overly prescriptive task definition

Neither of these would represent a case for genuine emergence in biological systems at the current moment. This of course does not deny that substantial increases in model performance can occur over time with additional training, just that they would not likely appear suddenly, in a surprising and discontinuous fashion. The primary issue lies in the first point: while it is true that larger models are likely needed to store the entirety of biological knowledge, we don’t yet have the data sets to train them. The second point is probably going to be more debated, as some have claimed somewhat “emergent” properties of models by saying that they were able to perform tasks that they were not explicitly trained on. This seems more likely to reflect a lack of imagination about the implicit information contained in the data that the models were trained on. To say that a model turns out to be a good splice site predictor because it was trained on CAGE data does not mean that the model is exhibiting emergence, but rather that CAGE data implicitly contains splicing information. To the extent that our knowledge of implicit information is incomplete, we may end up claiming emergence far more often than is warranted.

The Problem with Purpose of Emergent AGI

The task specific capabilities of deep neural networks and their potential for surprise notwithstanding, it’s worth addressing the problem of sentience, purpose, or intentionality that might arise from large neural networks. This idea is different from goal directed behavior, like the paperclip optimizer, and gets more to the essence of some form of self awareness. I would argue that the achievement of this type of AGI is unlikely from the standpoint of the evolutionary origin of purpose. Purpose is more likely than not a byproduct of evolution in a hostile world. This is probably best detailed in Richard Dawkins’ lecture on “The Purpose of Purpose”, which argues essentially that in a world where there are multiple levels of interpretive sophistication, from the purely descriptive to a hypothetical intentionality, the bias of survival is toward the latter. For example, in a world where the visual stimulus of a tiger could be interpreted as being striped with big claws, the creature that was able to move beyond this to an understanding that the tiger may have the intention of eating it was the one that evolution favored. As such, we have evolved to anthropomorphize the world around us and likely our own selves. From this sprang a host of deities that would control the weather and every aspect of life, and our awareness of ourselves and our consciousness has followed suit. Our inner monologue is as much a purpose forming narrative of our own behaviors as the narratives that we form about the purpose of others.

What’s important about this idea of consciousness, intentionality, and self directed purpose is that it evolved under selective pressure, in a self replicating and limited system, with pressure from an array of similar competitors. The extent to which humans have developed a sense of purpose, identity, intentionality, and narrative may very well be because it helped us survive and thrive amongst our peers in the world. The extension of this type of self actualization to computer programs seems much odder to consider, at least from this perspective. It is a bit difficult to conceive of a world in which a program has a replication or survival advantage by developing a sense of intentionality with respect to the behavior of other programs (or other sentient beings). I will admit that this is not necessarily impossible, just unlikely.

One might imagine a world where a computer network learns that humans who perform certain actions are prone to shut it down. It might then learn heuristics that it begins to apply to other inputs, ascribing intentions to them, and somehow those heuristics might reflect back on the network itself as a world view, so that it begins to question its place in the universe. This may very well be a path toward sentience, but without the specter of death, the concept of purpose seems a bit less likely to evolve.

Intelligence is a Systems Property not a Standalone

If we continue the thread of evolutionary pressure being the driving force for the development of intelligence, sentience, or self directed purpose, we have to consider all of these as system properties: they neither exist nor can be derived in isolation. This is the primary argument of Francois Chollet in his essay “On the Measure of Intelligence”. Intelligence is a property of a system and is derived from the ability of that system to develop solutions to the problems or challenges that exist within it.

To do so requires a few features:

the identification or existence of problems to be solved and an objective to do so

a mechanism by which an intelligence can learn about and adapt to those problems

Selective pressures over the course of evolution resolve both of these without an external intelligence: the “problem” is reproduction and the “mechanism” is mutation. Everything we know about intelligence has come from these two pressures, including the development of purpose, intentional goal setting, abstract narrative and so forth, all of which were created under such selective pressures.

It may be argued that AI based self-play and reinforcement learning provides a form of this type of selective pressure. However, at least currently, the context is externally imposed by human intelligence.

It would require (and indeed this may be possible) a circumstance where existential survival of an AI system was at stake for de-novo purpose generation to arise. It is possible for humans to set an objective function of “make as many copies of yourself as possible” for an AI system and it would pursue this objective aggressively, but it is unlikely that an independent form of purpose would arise unless there were competing pressures. To the extent that other AIs could prevent the objective, this would be the war of the machines, which indeed could drive an evolution we would not expect. GANs are the simplest example of this.

None of the above diminishes the concerns about AIs being leveraged to pursue human directed goals, which should be of utmost priority to manage. The argument here is rather about AGIs developing their own sense of neo-purpose.

Closing Thoughts

Understanding better how deep neural networks behave should be, and is, an active area of research. It will lead to better understanding of how to train them, how to build them more efficiently and how to guide them toward specific behaviors. There is little doubt that this is important. The appearance of new capabilities or improved performance on certain tasks as models scale, however, should not be considered something shrouded in mystery – and more importantly, should not be the axis upon which global policy, fear mongering, or other high priesthood mythology is based. This is an engineering discipline and should be treated as such. It seems unlikely that a rabbit will be pulled out of a hat or that an AI system will suddenly become enlightened. It seems even more unlikely that it will have a bone to pick with humanity if it does. We should keep looking under the hood, but avoid the fog that is the specter of emergence.

Final Words

Predictions are difficult to make, especially about the future…however, context matters. Good science is open to being updated with new information. Extraordinary claims require extraordinary evidence.

image ref: https://www.institutemergence.com/wp-content/uploads/2018/03/emergence-1.jpg